In data-rich enterprise environments, AI models can struggle to access and utilise scattered data to provide accurate responses.

AI assistants are limited by the data they were trained on, which can leave them isolated from an organisation’s most up-to-date information.

Enter the Model Context Protocol.

This open standard is designed to bridge that gap, allowing AI assistants to connect to external systems and securely access the information they need.

Ready to learn more? We’ve got you covered with this comprehensive guide to the Model Context Protocol, explaining what it is, how it works and what it can do, as well as the crucial M×N integration problem it helps to solve.

What Is the Model Context Protocol?

The Model Context Protocol (MCP) is an open standard designed to connect AI assistants directly to the systems where data is stored. These range from business tools and content repositories to development environments.

An open standard is a set of publicly available specifications, rules or guidelines that define how a particular technology should operate; in MCP's case, how AI applications connect to external data and tools. Its defining characteristics are that it is openly accessible and collaboratively developed.

Ultimately, the Model Context Protocol aims to help models produce more relevant, accurate responses. Models are inherently limited because they are confined to the data they were trained on or whatever is manually supplied in their context window, which can isolate them from your organisation's most relevant information.

This creates key problems for enterprises. Information silos mean that valuable data is trapped behind custom APIs or proprietary interfaces, and integration bottlenecks slow progress as organisations attempt to connect more tools.

MCP addresses this challenge: how to give AI models safe access to the information they need, when they need it, regardless of where that information is stored.

MCP provides AI models with relevant context from external systems, allowing for more accurate and personalised responses.

The Model Context Protocol also lets AI models go beyond simply repeating information and actually take action within external systems. This is important for creating agentic workflows.

How Does the Model Context Protocol Work?

The Model Context Protocol is built on a widely used software design pattern known as client-server architecture.

As the name suggests, a ‘client’ program sends requests to a ‘server’ program, which responds with the requested service or resource. MCP adapts this pattern for AI integration.
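To make the request/response pattern concrete, here is a minimal Python sketch. MCP messages are encoded as JSON-RPC 2.0, so the sketch uses that envelope; however, the `echo` method, the `HANDLERS` table and the direct function call standing in for a real transport (such as stdio or HTTP) are illustrative assumptions, not part of the MCP specification or any SDK.

```python
import json

# A toy "server": maps method names to handler functions.
# The methods and handlers here are illustrative only.
HANDLERS = {
    "ping": lambda params: {},
    "echo": lambda params: {"text": params.get("text", "")},
}


def handle_request(raw: str) -> str:
    """Server side: decode a JSON-RPC 2.0 request and return an encoded response."""
    request = json.loads(raw)
    handler = HANDLERS.get(request["method"])
    if handler is None:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        response = {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
    else:
        response = {"jsonrpc": "2.0", "id": request["id"],
                    "result": handler(request.get("params", {}))}
    return json.dumps(response)


def send_request(method: str, params: dict, request_id: int) -> dict:
    """Client side: encode a request, 'send' it to the server, decode the reply."""
    raw = json.dumps({"jsonrpc": "2.0", "id": request_id,
                      "method": method, "params": params})
    return json.loads(handle_request(raw))  # a direct call stands in for the transport
```

Calling `send_request("echo", {"text": "hello"}, 1)` returns `{"jsonrpc": "2.0", "id": 1, "result": {"text": "hello"}}`, while an unknown method comes back with the standard JSON-RPC error code `-32601`.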

The user-facing AI application through which you interact with the AI model is known as the MCP host; the most common example is an AI assistant. The host manages the interactions between the different AI components: it receives user requests and then ‘decides’ how to use the available tools to fulfil them.

Within the MCP host sits the MCP client, a software component responsible for maintaining a session with an MCP server; each client connects to a single server. A session is a continuous, interactive exchange of messages between the two systems.

Put simply, it acts as a translator, turning the host's requests into messages in the format MCP expects.

The MCP server is a software component that acts as an adapter for an external system such as a database or CRM. It exposes the capabilities of that underlying system in a standard form that the AI can understand and use.
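The division of labour between host, client and server can be sketched in a few lines of Python. Everything here is hypothetical: `CrmServer`, `Client`, `Host` and the `lookup_contact` capability are illustrative names, the "CRM" is a plain dictionary, and the host's ‘decision’ is a simple capability lookup where a real host would typically let the model choose. It is not the official MCP SDK.

```python
import json


class CrmServer:
    """Hypothetical server: adapts an in-memory 'CRM' to JSON-RPC messages."""

    def __init__(self, contacts: dict):
        self._contacts = contacts  # stands in for the external system

    def describe(self) -> dict:
        # Advertise what this server can do in a uniform, machine-readable form.
        return {"name": "crm", "capabilities": ["lookup_contact"]}

    def handle(self, raw: str) -> str:
        request = json.loads(raw)
        if request["method"] != "lookup_contact":
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            return json.dumps({"jsonrpc": "2.0", "id": request["id"],
                               "error": {"code": -32601, "message": "Method not found"}})
        email = self._contacts.get(request["params"]["name"])
        return json.dumps({"jsonrpc": "2.0", "id": request["id"],
                           "result": {"email": email}})


class Client:
    """Hypothetical client: holds one session with exactly one server."""

    def __init__(self, server: CrmServer):
        self._server = server
        self.info = server.describe()  # learned once, when the session starts
        self._next_id = 0

    def call(self, method: str, params: dict) -> dict:
        # Translate the host's request into a JSON-RPC message and decode the reply.
        self._next_id += 1
        raw = json.dumps({"jsonrpc": "2.0", "id": self._next_id,
                          "method": method, "params": params})
        response = json.loads(self._server.handle(raw))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]


class Host:
    """Hypothetical host: the user-facing app that routes requests to clients."""

    def __init__(self, clients: list):
        self.clients = clients

    def fulfil(self, capability: str, params: dict) -> dict:
        # 'Decide' which connected server can handle the request.
        for client in self.clients:
            if capability in client.info["capabilities"]:
                return client.call(capability, params)
        raise LookupError(f"no connected server offers {capability!r}")
```

The host never touches the CRM directly: it asks a client, which exchanges JSON-RPC messages with the server, which in turn adapts the underlying system. With `host = Host([Client(CrmServer({"Ada": "ada@example.com"}))])`, the call `host.fulfil("lookup_contact", {"name": "Ada"})` returns `{"email": "ada@example.com"}`.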

What Can MCP Servers Do?

MCP servers tell the AI what it can do in a given situation, from retrieving information from external systems to taking actions within them. They do this through three main building blocks: resources, tools and prompts.

Resources are primarily used for retrieving information. They let the AI read data from an external system without causing a "side effect", meaning they don't change the system's state.

An example of this would be fetching purchase history from a database without changing the database itself.
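A read-only resource can be sketched as a function that looks data up and returns a snapshot without touching the source. The result shape below is loosely modelled on MCP's `resources/read` response (a `contents` list whose entries carry a `uri`, `mimeType` and `text`), but the `db://customers/{id}/purchases` URI scheme, the `DATABASE` dictionary and `read_resource` itself are hypothetical.

```python
import json

# Hypothetical external system: a tiny in-memory "database" of purchases.
DATABASE = {"42": [{"item": "laptop", "price": 999.0}, {"item": "mouse", "price": 25.0}]}


def read_resource(uri: str) -> dict:
    """Handle a read-only resource request; never modifies DATABASE."""
    prefix, suffix = "db://customers/", "/purchases"
    if not (uri.startswith(prefix) and uri.endswith(suffix)):
        raise ValueError(f"unknown resource: {uri}")
    customer_id = uri[len(prefix):-len(suffix)]
    purchases = DATABASE.get(customer_id, [])
    # Return a serialised snapshot so callers cannot mutate the source data.
    return {"contents": [{"uri": uri, "mimeType": "application/json",
                          "text": json.dumps(purchases)}]}
```

Because the handler only reads `DATABASE`, calling it any number of times leaves the database exactly as it was, which is what makes it safe to expose as a resource.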

Tools are the actions that the AI can perform. Unlike resources, tools can cause a "side effect", meaning they change the state of the external system. This includes tasks like sending an email or updating a record.
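A tool, by contrast, changes state. This sketch loosely follows the shape of MCP's `tools/call` request (a tool `name` plus `arguments`) and result (a `content` list and an `isError` flag); the `update_email` tool, the `RECORDS` store and the `call_tool` dispatcher are hypothetical illustrations.

```python
# Hypothetical external system: customer records keyed by ID.
RECORDS = {"42": {"name": "Ada", "email": "ada@old.example"}}


def update_email(customer_id: str, email: str) -> str:
    """A tool implementation: changes state in RECORDS (a side effect)."""
    if customer_id not in RECORDS:
        raise KeyError(customer_id)
    RECORDS[customer_id]["email"] = email
    return f"Updated email for customer {customer_id}"


# Registry of the tools this hypothetical server exposes, by name.
TOOLS = {"update_email": update_email}


def call_tool(params: dict) -> dict:
    """Dispatch a tools/call-style request: {"name": ..., "arguments": {...}}."""
    tool = TOOLS.get(params["name"])
    if tool is None:
        return {"content": [{"type": "text", "text": "Unknown tool"}], "isError": True}
    try:
        text = tool(**params["arguments"])
    except (KeyError, TypeError) as exc:
        # Report failures in the result so the caller can see what went wrong.
        return {"content": [{"type": "text", "text": f"Tool failed: {exc!r}"}], "isError": True}
    return {"content": [{"type": "text", "text": text}], "isError": False}
```

After `call_tool({"name": "update_email", "arguments": {"customer_id": "42", "email": "ada@new.example"}})`, `RECORDS["42"]["email"]` is `"ada@new.example"`: the external system has changed. In this sketch, failures are reported through `isError` rather than raised, so the caller (and ultimately the model) can see what went wrong.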

Prompts are reusable templates or structured workflows that a server offers for interacting with the LLM. They can package predefined interactions, such as summarising a document. Prompts help streamline common AI interactions and keep them consistent.
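A prompt can be sketched as a named, parameterised template that the server fills in and hands back as ready-to-use messages. The return shape is loosely modelled on MCP's `prompts/get` result (a `description` and a list of `messages`), while the `summarise_document` template, its arguments and `get_prompt` are hypothetical; Python's standard `string.Template` does the substitution.

```python
from string import Template

# Hypothetical prompt templates a server might offer, keyed by name.
PROMPTS = {
    "summarise_document": {
        "description": "Summarise a document as bullet points",
        "template": Template(
            "Summarise the following document in $length bullet points:\n\n$document"
        ),
        "required": ["document", "length"],
    }
}


def get_prompt(name: str, arguments: dict) -> dict:
    """Fill a named template with arguments and return ready-to-send messages."""
    prompt = PROMPTS[name]
    missing = [arg for arg in prompt["required"] if arg not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    text = prompt["template"].substitute(arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }
```

Every caller that asks for `summarise_document` receives the same wording with only the arguments changed, which is where the consistency benefit comes from.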

What Is the M×N Integration Problem?

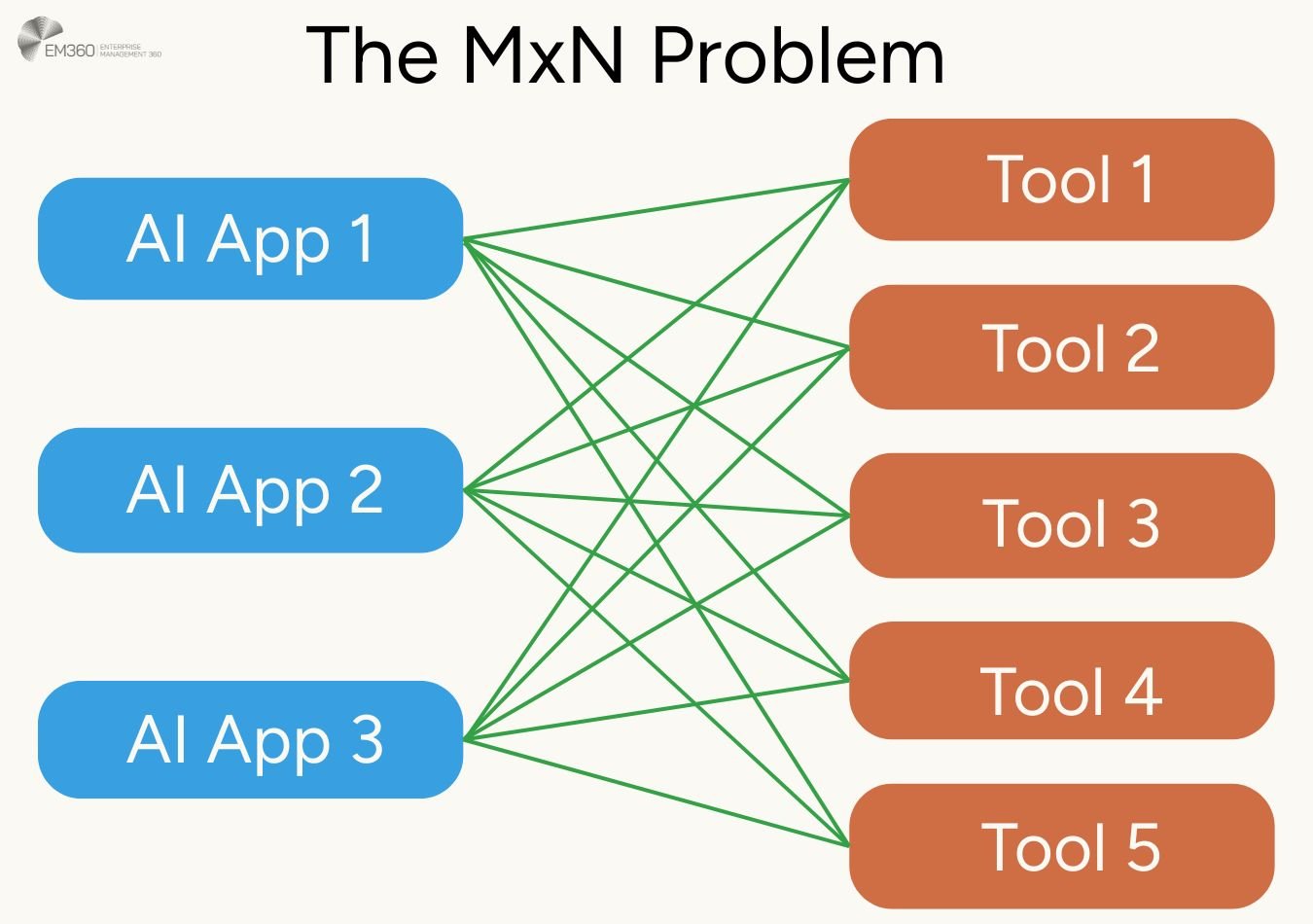

The M×N integration problem, also known as the N×M integration problem, is a common software development challenge and a particular issue for AI software.

The M×N integration problem describes a scenario in which a number of different applications or systems (“N”) all need to connect to a number of different external services, tools or data sources (“M”).

If each of the N systems needs a unique, custom-built connection to every one of the M external services, the total number of individual integrations that must be built and then continuously maintained is N × M, which quickly becomes a very large number.
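The arithmetic is easy to see by enumerating the connectors directly. In this minimal Python sketch the application and service names are placeholders; each (application, service) pair produced by `itertools.product` represents one bespoke integration.

```python
from itertools import product


def point_to_point_integrations(apps: list, services: list) -> list:
    """Every (app, service) pair needs its own bespoke connector."""
    return [f"{app}->{service}" for app, service in product(apps, services)]


apps = [f"app{i}" for i in range(1, 6)]       # N = 5 placeholder AI applications
services = [f"svc{j}" for j in range(1, 11)]  # M = 10 placeholder external services
connectors = point_to_point_integrations(apps, services)
print(len(connectors))  # 50, i.e. N x M
```

With 5 applications and 10 services this prints `50`, and every new application adds another M connectors to build and maintain.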

This presents several challenges in deploying and scaling AI. Development teams have to write bespoke code for each integration, which creates an excessive maintenance burden, especially as new software is rolled out or security patches are applied.

Each additional custom integration also enlarges the potential attack surface, creating more vulnerabilities that attackers could exploit.

How MCP Solves the M×N Integration Problem

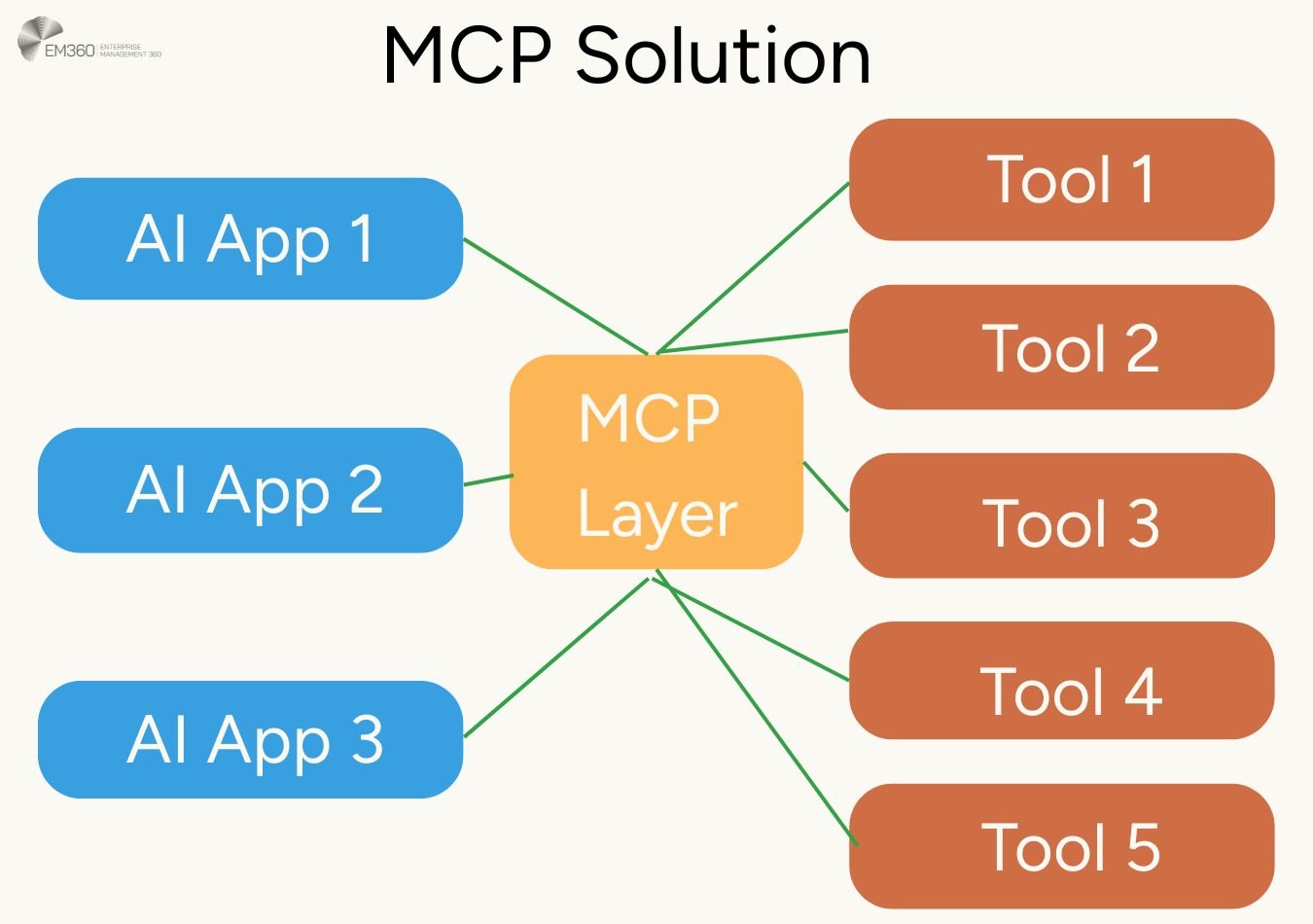

Instead of creating a custom connection between every pair of systems, MCP proposes a standardised middle layer, effectively converting the N × M problem into an N + M problem.

Under the MCP model, you would build N AI clients that are designed to understand and communicate using the MCP standard.

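You would also build M MCP servers, each acting as a standardised adapter for a specific external service. Because any MCP client can talk to any MCP server, the total comes to N + M components rather than N × M bespoke integrations.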
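A quick comparison of the two totals shows how the gap widens as N and M grow. This sketch simply counts components (one MCP client per application, one MCP server per service) and treats each as a single unit of work, which is a simplifying assumption; real build and maintenance effort will vary.

```python
def integration_counts(n_clients: int, m_services: int) -> dict:
    """Compare bespoke point-to-point integrations with an MCP-style shared layer."""
    return {
        "point_to_point": n_clients * m_services,  # one connector per (app, service) pair
        "mcp": n_clients + m_services,             # one client per app, one server per service
    }


for n, m in [(2, 3), (5, 10), (20, 50)]:
    counts = integration_counts(n, m)
    print(f"N={n}, M={m}: {counts['point_to_point']} bespoke vs {counts['mcp']} MCP components")
```

For N=2, M=3 the counts are 6 versus 5, for N=5, M=10 they are 50 versus 15, and for N=20, M=50 they are 1000 versus 70. With a single application there is no saving in raw count (M versus M + 1); the benefit in that case comes from reusing standard servers rather than writing bespoke connectors.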

This fundamental shift can dramatically reduce the total number of custom integrations required, paving the way for much more efficient, scalable and secure AI deployment across an enterprise.
